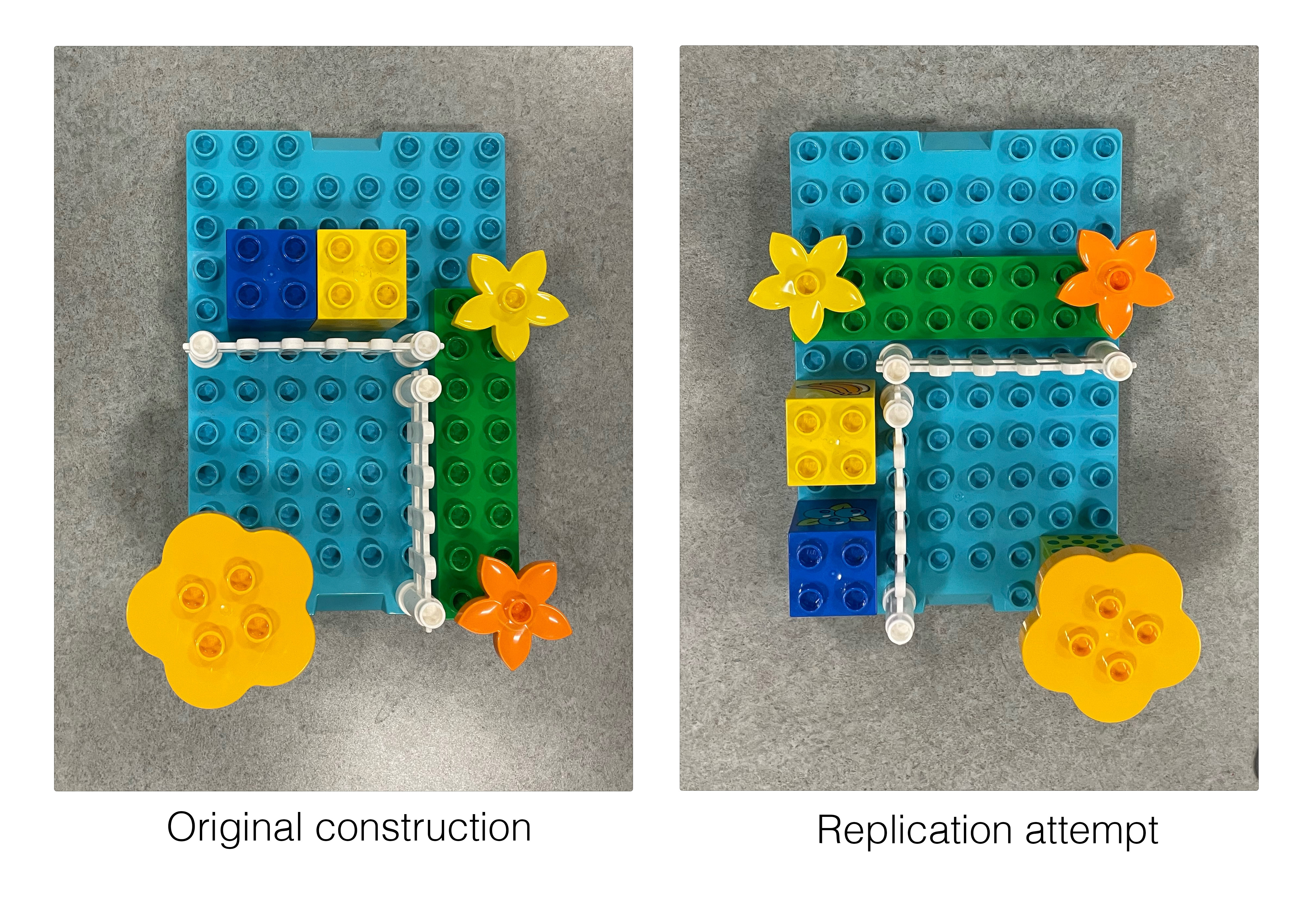

Picture students divided into groups in a classroom, each group intently focused on a pile of Lego® building bricks. You’re probably picturing children at an elementary school, but in this particular case, they are graduate students at the Bren School of Environmental Science & Management at UC Santa Barbara. Their task? To build a fantasy creation of their liking, with the proviso that they must describe their construction, in writing, in a way that allows it to be replicated. After a few minutes, the groups disassemble their creations, rotate positions, and attempt to recreate each other’s constructions based on the written instructions. If they’re successful, the same creations will emerge. But if they are not (and indeed, they often are not), hilarity ensues, and therein lies the lesson.

This variation on the age-old children’s game of “telephone”—can a message be communicated through a chain of people intact?—is actually a serious exercise in understanding the challenges and requirements of making research replicable. Based on The Lego® Metadata for Reproducibility game pack, it is part of a new annual training provided to Bren students by Dr. Julien Brun, the Earth & Environmental Sciences Research Facilitator in UCSB Library’s Research Data Services (RDS) department. The training includes instruction in open science principles and research data management practices, helping students to meet their capstone projects’ requirements of scientific reproducibility and data preservation.

Students working through the Lego exercise often discover the need for some type of coordinate system to describe the position and orientation of the pieces. (If a brick is “to the left” of another brick, from whose perspective?) And they quickly come to appreciate the value of having a shared, standardized terminology for describing the many and subtly different types of bricks. Even with those realizations, though, it can still be challenging for a group of people viewing something together to recognize the entirety of what must be communicated to future readers, and to anticipate what may be prone to misinterpretation.
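What the students converge on can be pictured as a small data structure. The Python sketch below is purely illustrative and is not part of the game pack: the brick names, the `Placement` class, the choice of origin, and the rotation convention are all assumptions made for this example.

```python
from dataclasses import dataclass

# Hypothetical shared vocabulary of brick types (not an official Lego catalog).
BRICK_TYPES = {"brick_2x4", "brick_2x2", "plate_1x2"}
# Allowed rotations, in degrees clockwise as seen from above (assumed convention).
ROTATIONS = {0, 90, 180, 270}


@dataclass(frozen=True)
class Placement:
    brick: str         # must be a term from BRICK_TYPES
    color: str
    x: int             # studs to the right of the baseplate's marked corner
    y: int             # studs away from that corner
    z: int             # layers above the baseplate
    rotation: int = 0  # degrees clockwise, seen from above

    def __post_init__(self):
        # Reject anything the shared conventions do not cover.
        if self.brick not in BRICK_TYPES:
            raise ValueError(f"unknown brick type: {self.brick!r}")
        if self.rotation not in ROTATIONS:
            raise ValueError(f"rotation must be one of {sorted(ROTATIONS)}")
        if min(self.x, self.y, self.z) < 0:
            raise ValueError("coordinates must be non-negative")


def describe(p):
    """Turn one placement into an instruction with no 'left' or 'right'."""
    return (f"Place a {p.color} {p.brick} at x={p.x}, y={p.y}, layer {p.z}, "
            f"rotated {p.rotation} degrees clockwise.")


def build_order(placements):
    """Sort placements bottom layer first so the steps can be followed in order."""
    return sorted(placements, key=lambda p: (p.z, p.y, p.x))
```

Because every instruction refers to the same marked corner and the same list of brick names, a phrase like “to the left” never has to appear, and a term outside the agreed vocabulary is rejected before it can confuse the next group.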

The analogies between the Lego exercise and science quickly become evident to the Bren students. The now widely adopted principles of open science demand transparency in all facets of the scientific method: transparency of citation and sources, of methods and code, of research materials, of design and analysis, of outputs. Such transparency often takes the form of documentation that accompanies publications, data, and code and that is stored in publicly accessible repositories. A major challenge of creating that documentation is taking knowledge that is intrinsic and implicit, and that is often built up and shared within projects, labs, and scientific communities, and making it explicit and manifest.

“Communicating one's method takes effort, but it is an investment with a great return on productivity by enabling the students to reuse their own work,” says Dr. Brun. “It is also a cornerstone of modern science practice as increasingly interdisciplinary science means that data and methods can be reused by researchers and communities never originally intended or envisioned.”

RDS helps students, faculty, and researchers in the Bren School—and in all other schools and colleges at UCSB—use technologies that facilitate the recording and communication of methodology. These include:

- Metadata standards, which define and standardize how data is described. One can always describe a dataset using narrative prose, but there is considerable value in using a predefined set of fields, both for increased uniformity and to remind researchers what can and should be described. Many metadata standards have been developed. Some aim for shallow but broad applicability (e.g., one of the most widely adopted standards, Dublin Core, focuses on common fields such as Title, Creator, and Publisher) while others address the needs of particular data types and research communities.

- Controlled vocabularies, which standardize terms and their meanings. Terminology is notoriously difficult to agree upon even within a single scientific community, let alone across communities. As with metadata standards, many vocabularies have been developed, and where they overlap, so-called “crosswalks” (mappings) have been developed. The value of a vocabulary such as the Climate and Forecast (CF) Metadata Conventions, to take but one example, is that it enumerates and defines terms such as “tidal sea surface height above mean sea level,” giving a commonly referenced quantity a standardized name, a unit of measure, and a definition, as well as distinguishing it from other, similar terms.

- Formal research protocols, which provide uniform, machine-readable ways of describing procedural steps and the materials (reagents, specimen lines, etc.) and instrumentation needed to carry out those steps. By utilizing a formal framework, protocols can be published, cited, reused, and collaborated on. UCSB subscribes to the protocols.io service for this purpose.

- Persistent identifier (PID) systems, which aim to prevent broken links and which provide the foundation for citability in scholarly communication. So-called “PIDs” are used to globally, uniquely, and persistently identify everything from documents to data, to code and methods, to instruments and samples.

- Computational notebook platforms, such as Jupyter and RStudio, and version control systems, such as Git and GitHub, which both formalize and facilitate the maintenance of documentation and project history and provide new opportunities for melding research and publications.
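To make the first two items concrete, here is a minimal Python sketch of how a predefined set of fields and a controlled vocabulary can catch omissions and ad hoc terms. It is an illustration under stated assumptions, not a tool RDS provides: the `REQUIRED` policy, the `check_record` helper, and the one-entry vocabulary excerpt are hypothetical, and the identifier in the usage note is a placeholder rather than a real DOI. The field names are the fifteen elements of the Dublin Core Metadata Element Set.

```python
# The fifteen elements of the Dublin Core Metadata Element Set.
DUBLIN_CORE = {
    "title", "creator", "subject", "description", "publisher",
    "contributor", "date", "type", "format", "identifier",
    "source", "language", "relation", "coverage", "rights",
}

# Hypothetical project policy: fields every dataset record must fill in.
REQUIRED = {"title", "creator", "date", "identifier"}

# Tiny illustrative excerpt of a controlled vocabulary: term -> expected unit.
VOCABULARY = {
    "tidal_sea_surface_height_above_mean_sea_level": "m",
}


def check_record(record, variables):
    """Return a list of problems found in a metadata record.

    record:    dict mapping field names to values
    variables: dict mapping each variable name used in the data to its unit
    """
    problems = []
    for field in sorted(set(record) - DUBLIN_CORE):
        problems.append(f"unknown field: {field}")
    for field in sorted(REQUIRED - set(record)):
        problems.append(f"missing field: {field}")
    for name, unit in variables.items():
        if name not in VOCABULARY:
            problems.append(f"variable not in vocabulary: {name}")
        elif VOCABULARY[name] != unit:
            problems.append(f"unexpected unit for {name}: {unit}")
    return problems
```

A record such as `{"title": "Tide gauge readings", "creator": "A. Student", "date": "2023-05-01", "identifier": "doi:10.5072/example"}` with the variable above measured in `"m"` yields an empty list, while a record that omits the creator or labels a variable `sea_height` is flagged before it reaches a repository.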

All these technologies aim to reduce the burden of describing methodology, reduce ambiguity, facilitate communication, and ultimately improve the reusability of past work and the reproducibility of results. If you can do it for Legos, you can do it for science!

Further reading

- B. A. Nosek et al. (2015). Promoting an open research culture. Science 348:1422-1425. https://doi.org/10.1126/science.aab2374

- Fiona Fidler and John Wilcox (2021). Reproducibility of Scientific Results. The Stanford Encyclopedia of Philosophy, Edward N. Zalta (ed.). https://plato.stanford.edu/archives/sum2021/entries/scientific-reproducibility